It provides methods for performing extrinsic and intrinsic evaluation of an n-gram model. It also provides a method for comparing the performance of multiple n-gram models.

Intrinsic evaluation is based on the calculation of Perplexity. Extrinsic evaluation measures the percentage of correct next-word predictions.
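For reference, the standard textbook definition of the Perplexity of a sequence of N words under an n-gram model is given below. The exact variant computed by ModelEvaluator (log base, smoothing, handling of unseen words) is not described on this page, so treat this as background rather than as the package's own formula. Lower values mean the model is less "surprised" by the text.

```latex
PP(w_1, \dots, w_N) = P(w_1, \dots, w_N)^{-1/N}
  = \exp\left( -\frac{1}{N} \sum_{i=1}^{N} \log P(w_i \mid w_{i-n+1}, \dots, w_{i-1}) \right)
```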

Details

Before performing the intrinsic and extrinsic model evaluation, a validation file must first be generated. This can be done using the DataSampler class.

Each line in the validation file is evaluated. For intrinsic evaluation, the Perplexity of each line is calculated, and a summary of these values is returned: the minimum, maximum and mean Perplexity.
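A minimal sketch of this per-line calculation and summary, assuming the model has already supplied a conditional probability for every word in each line. The helpers `line_perplexity()` and `summarise_perplexity()` are hypothetical, the probabilities are made up, and the package may compute or round the values differently.

```r
# Hypothetical helper: Perplexity of one line, given the model's conditional
# probability for each word in that line (how these probabilities are
# obtained from the n-gram model is not shown here).
line_perplexity <- function(word_probs) {
  stopifnot(length(word_probs) > 0, all(word_probs > 0), all(word_probs <= 1))
  # exp of the negative mean log-probability, i.e. P(line)^(-1/N)
  exp(-mean(log(word_probs)))
}

# Hypothetical helper: summarise per-line Perplexity as min, max and mean,
# mirroring the fields printed by intrinsic_evaluation().
summarise_perplexity <- function(line_probs) {
  pp <- vapply(line_probs, line_perplexity, numeric(1))
  list(min = min(pp), max = max(pp), mean = mean(pp))
}

# Made-up word probabilities for two validation lines
probs <- list(
  c(0.1, 0.1, 0.1),  # every word has probability 0.1 -> Perplexity 10
  c(0.5, 0.5)        # every word has probability 0.5 -> Perplexity 2
)
stats <- summarise_perplexity(probs)
print(stats)
```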

For extrinsic evaluation, next-word prediction is performed on each line. If the actual next word is one of the three predicted next words, the prediction is considered correct. The extrinsic evaluation returns the number and percentage of correct and incorrect predictions.
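A minimal sketch of the scoring step, assuming the predicted words and the actual next words are already available. `score_predictions()` is a hypothetical helper and the words are made up; its result fields mirror the `valid`, `invalid`, `valid_perc` and `invalid_perc` fields printed by `extrinsic_evaluation()` in the examples.

```r
# Hypothetical helper: score next-word predictions. A prediction counts as
# correct ("valid") when the actual next word is among the predicted words.
score_predictions <- function(predicted, actual) {
  # predicted: list of character vectors (e.g. the top 3 words per line)
  # actual:    character vector of the true next words, one per line
  stopifnot(length(actual) > 0, length(predicted) == length(actual))
  hits <- mapply(function(p, a) a %in% p, predicted, actual)
  valid <- sum(hits)
  invalid <- length(hits) - valid
  list(
    valid = valid,
    invalid = invalid,
    valid_perc = 100 * valid / length(hits),
    invalid_perc = 100 * invalid / length(hits)
  )
}

# Made-up predictions (3 words per line) and actual next words
predicted <- list(
  c("the", "a", "to"),
  c("of", "in", "for"),
  c("is", "was", "be"),
  c("and", "or", "but")
)
actual <- c("a", "on", "was", "so")
res <- score_predictions(predicted, actual)
print(res)
```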

Super class

wordpredictor::Base -> ModelEvaluator

Methods

Method new()

It initializes the current object. It is used to set the model file name and the verbosity level.

Usage

ModelEvaluator$new(mf = NULL, ve = 0)

Method compare_performance()

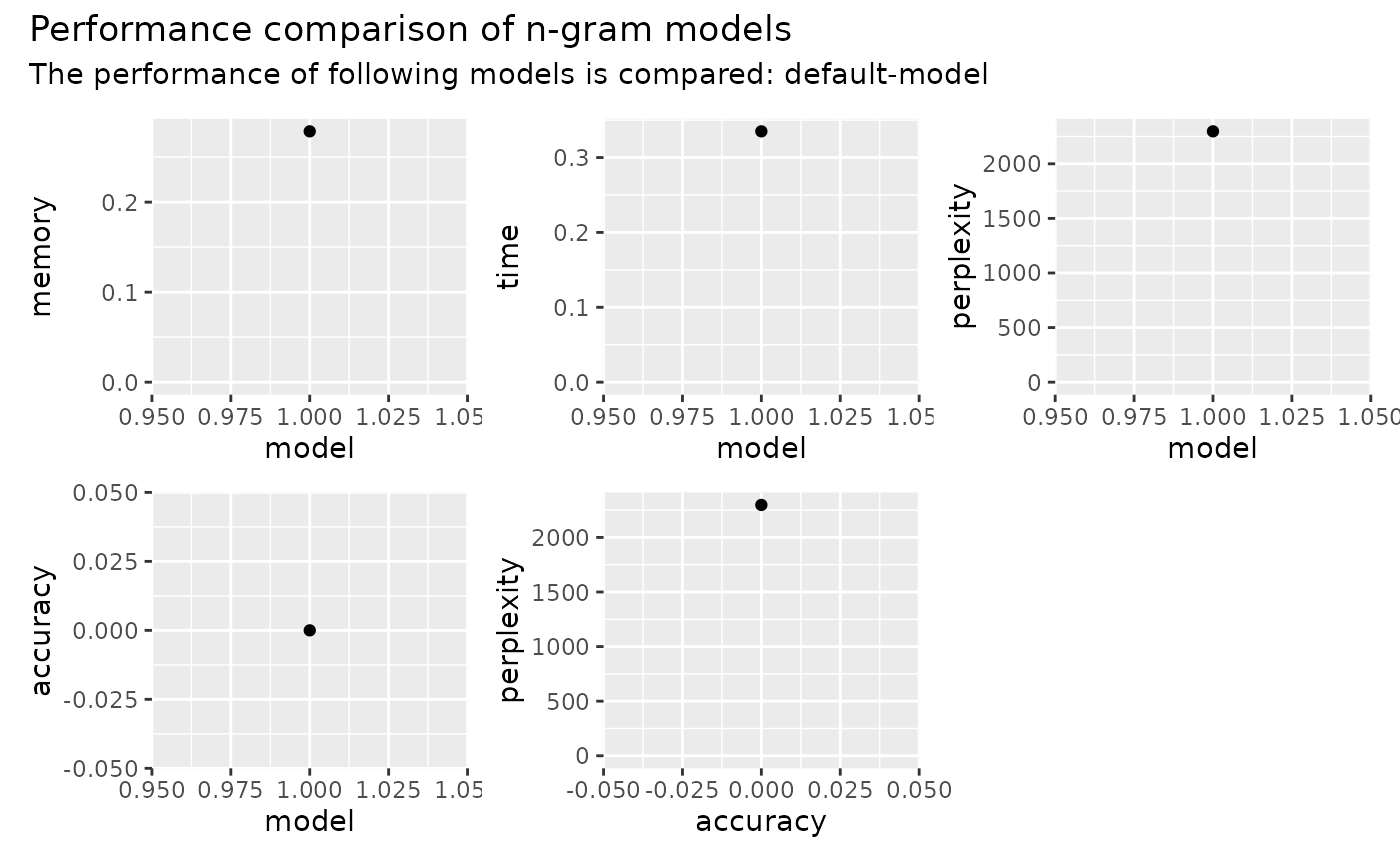

It compares the performance of the models in the given folder.

The models are compared on 4 performance metrics: time taken, memory used, Perplexity and accuracy. The comparison is displayed as plots.

Four plots are displayed, one for each performance metric. A fifth plot shows the variation of Perplexity with accuracy. All 5 plots are drawn on a single page.
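Before plotting, the stats of each model have to be gathered into one table. A minimal sketch, assuming each model's stats are a list with the elements `m`, `t`, `p` and `a` (the names printed by `evaluate_performance()` in the examples). `get_metric()`, `stats_to_df()` and the model names are hypothetical, and the numbers are illustrative, not measurements.

```r
# Hypothetical helper: extract one metric from every model's stats list
get_metric <- function(stats_list, key) {
  vapply(stats_list, function(s) as.numeric(s[[key]]), numeric(1),
         USE.NAMES = FALSE)
}

# Hypothetical helper: one row per model, one column per metric
stats_to_df <- function(stats_list) {
  data.frame(
    model = names(stats_list),
    time = get_metric(stats_list, "t"),
    memory = get_metric(stats_list, "m"),
    perplexity = get_metric(stats_list, "p"),
    accuracy = get_metric(stats_list, "a"),
    stringsAsFactors = FALSE
  )
}

# Illustrative values for two hypothetical models (not real measurements)
stats_list <- list(
  "model-a" = list(m = 1000, t = 0.5, p = 300, a = 10),
  "model-b" = list(m = 4000, t = 0.8, p = 250, a = 12)
)
df <- stats_to_df(stats_list)
# Order the models from lowest (best) to highest Perplexity
print(df[order(df$perplexity), ])
```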

Arguments

opts: The options for comparing model performance. It is a list with the following elements:

save_to: The graphics device to save the plot to. NULL implies the plot is printed.

dir: The directory containing the model files, plot and stats.

Examples

# Start of environment setup code
# The level of detail in the information messages
ve <- 0
# The name of the folder that will contain all the files. It will be
# created in the current directory. NULL implies tempdir will be
# used.
fn <- NULL
# The required files. They are default files that are part of the
# package
rf <- c("def-model.RDS")
# An object of class EnvManager is created
em <- EnvManager$new(ve = ve, rp = "./")
# The required files are downloaded
ed <- em$setup_env(rf, fn)
# End of environment setup code
# ModelEvaluator class object is created
me <- ModelEvaluator$new(ve = ve)
# The performance evaluation is performed
me$compare_performance(opts = list(
  "save_to" = NULL,
  "dir" = ed
))
# The test environment is removed. Comment the below line, so the
# files generated by the function can be viewed
em$td_env()

Method plot_stats()

It plots the given stats on 5 plots, which are displayed on a single page.

The 4 performance metrics (time taken, memory, Perplexity and accuracy) are plotted against the model name. A fifth plot compares Perplexity with accuracy for each model.
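A minimal sketch of this 5-panel layout using base R graphics, assuming the stats are in a data frame with one row per model and the columns `model`, `time`, `memory`, `perplexity` and `accuracy`. `plot_stats_sketch()` is a hypothetical helper, the values are illustrative, and the package's own implementation may use a different plotting system.

```r
# Hypothetical sketch: draw the 5 comparison plots on one page with base R
# graphics. 'df' has one row per model.
plot_stats_sketch <- function(df) {
  old_par <- par(mfrow = c(3, 2))   # 3 x 2 grid: room for 5 panels
  on.exit(par(old_par))             # restore the previous layout afterwards
  metrics <- c("time", "memory", "perplexity", "accuracy")
  # Panels 1 to 4: each metric plotted against the model name
  for (metric in metrics) {
    barplot(df[[metric]], names.arg = df$model, main = metric, ylab = metric)
  }
  # Panel 5: Perplexity against accuracy, one labelled point per model
  plot(df$accuracy, df$perplexity, xlab = "accuracy", ylab = "perplexity",
       main = "perplexity vs accuracy", pch = 19)
  text(df$accuracy, df$perplexity, labels = df$model, pos = 3)
  invisible(df)
}

df <- data.frame(
  model = c("model-a", "model-b"),  # hypothetical model names
  time = c(0.5, 0.8),               # illustrative values only
  memory = c(1000, 4000),
  perplexity = c(300, 250),
  accuracy = c(10, 12),
  stringsAsFactors = FALSE
)
pdf(NULL)               # null device: draw without writing a file
plot_stats_sketch(df)
invisible(dev.off())
```

Replacing `pdf(NULL)` with `png("stats.png")` (or leaving it out in an interactive session) would save or show the page instead.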

Method evaluate_performance()

It performs intrinsic and extrinsic evaluation of the given model using the given validation text file. The given number of lines from the validation file is used in the evaluation.

Intrinsic evaluation is based on Perplexity, and extrinsic evaluation is based on accuracy.

It returns 4 evaluation metrics: Perplexity, accuracy, memory and time taken. Memory is the size of the model object. Time taken is the time needed to perform both evaluations.

The results of the evaluation are saved within the model object and are also returned.
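A minimal sketch of how the memory and time metrics can be measured in base R, assuming the two evaluations are available as functions. `evaluate_sketch()` and the stand-in model and evaluation functions are hypothetical; the names `m`, `t`, `p` and `a` follow the output printed in the examples, but the package may measure memory or time differently.

```r
# Hypothetical sketch: run both evaluations, timing them together, and
# report memory as the size of the model object.
evaluate_sketch <- function(model, intrinsic_fun, extrinsic_fun) {
  timing <- system.time({
    p <- intrinsic_fun(model)   # stand-in for the Perplexity calculation
    a <- extrinsic_fun(model)   # stand-in for the accuracy calculation
  })
  list(
    m = as.numeric(object.size(model)),  # memory: size of the model in bytes
    t = timing[["elapsed"]],             # elapsed seconds for both evaluations
    p = p,
    a = a
  )
}

# A stand-in "model" and stand-in evaluation functions (illustrative only)
model <- list(unigrams = c(the = 10, cat = 2, sat = 1))
res <- evaluate_sketch(model,
                       intrinsic_fun = function(m) 150,
                       extrinsic_fun = function(m) 20)
print(res)
```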

Arguments

lc: The number of lines of text in the validation file to be used for the evaluation.

fn: The name of the validation file. If it does not exist, then the default file validation-clean.txt is looked for in the models folder.

Examples

# Start of environment setup code
# The level of detail in the information messages
ve <- 0
# The name of the folder that will contain all the files. It will be
# created in the current directory. NULL implies tempdir will be used
fn <- NULL
# The required files. They are default files that are part of the
# package
rf <- c("def-model.RDS", "validate-clean.txt")
# An object of class EnvManager is created
em <- EnvManager$new(ve = ve, rp = "./")
# The required files are downloaded
ed <- em$setup_env(rf, fn)
# End of environment setup code
# The model file name
mfn <- paste0(ed, "/def-model.RDS")
# The validation file name
vfn <- paste0(ed, "/validate-clean.txt")
# ModelEvaluator class object is created
me <- ModelEvaluator$new(mf = mfn, ve = ve)
# The performance evaluation is performed
stats <- me$evaluate_performance(lc = 20, fn = vfn)
# The evaluation stats are printed
print(stats)
# The test environment is removed. Comment the below line, so the
# files generated by the function can be viewed
em$td_env()

Method intrinsic_evaluation()

Evaluates the model using intrinsic evaluation based on Perplexity. The given number of sentences is taken from the validation file, and the Perplexity of each sentence is calculated. The minimum, maximum and mean Perplexity are returned.

Arguments

lc: The number of lines of text in the validation file to be used for the evaluation.

fn: The name of the validation file. If it does not exist, then the default file validation-clean.txt is looked for in the models folder.
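A minimal sketch of the file fallback and line limit described by these arguments. `read_validation_lines()` and its `models_dir` parameter are hypothetical; the default file name is taken from the argument description (the examples themselves use validate-clean.txt), and it is an assumption that the first lc lines are the ones used.

```r
# Hypothetical helper: pick the validation file and read its first lc lines.
# If fn does not exist, fall back to a default file in the models folder.
read_validation_lines <- function(fn, lc, models_dir,
                                  default_name = "validation-clean.txt") {
  if (is.null(fn) || !file.exists(fn)) {
    fn <- file.path(models_dir, default_name)
  }
  if (!file.exists(fn)) stop("validation file not found: ", fn)
  readLines(fn, n = lc, warn = FALSE)
}

# Usage with a temporary models folder containing the default file
models_dir <- tempfile("models")
dir.create(models_dir)
writeLines(c("the cat sat", "on the mat", "a third line"),
           file.path(models_dir, "validation-clean.txt"))
# fn does not exist, so the default file is used; only 2 lines are read
val_lines <- read_validation_lines(file.path(models_dir, "missing.txt"), 2,
                                   models_dir)
print(val_lines)
```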

Examples

# Start of environment setup code
# The level of detail in the information messages
ve <- 0
# The name of the folder that will contain all the files. It will be
# created in the current directory. NULL implies tempdir will be used
fn <- NULL
# The required files. They are default files that are part of the
# package
rf <- c("def-model.RDS", "validate-clean.txt")
# An object of class EnvManager is created
em <- EnvManager$new(ve = ve, rp = "./")
# The required files are downloaded
ed <- em$setup_env(rf, fn)
# End of environment setup code
# The model file name
mfn <- paste0(ed, "/def-model.RDS")
# The validation file name
vfn <- paste0(ed, "/validate-clean.txt")
# ModelEvaluator class object is created
me <- ModelEvaluator$new(mf = mfn, ve = ve)
# The intrinsic evaluation is performed
stats <- me$intrinsic_evaluation(lc = 20, fn = vfn)
# The evaluation stats are printed
print(stats)
# The test environment is removed. Comment the below line, so the
# files generated by the function can be viewed
em$td_env()

Method extrinsic_evaluation()

Evaluates the model using extrinsic evaluation based on accuracy. The given number of sentences is taken from the validation file.

For each sentence, the model is used to predict the next word. A prediction is considered correct if one of the predicted words matches the actual next word. The accuracy stats are returned.
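The text does not say how a sentence is turned into a prediction task. One plausible approach, assumed here, is to treat the last word as the actual next word and the words before it as the context given to the model. `split_line()` is a hypothetical helper, not part of the package.

```r
# Hypothetical helper: turn one validation line into a prediction task.
# The last word is the "actual" next word; the words before it are the
# context passed to the model.
split_line <- function(line) {
  words <- strsplit(trimws(line), "\\s+")[[1]]
  if (length(words) < 2) return(NULL)  # nothing to predict from
  list(context = paste(words[-length(words)], collapse = " "),
       actual = words[length(words)])
}

task <- split_line("  the cat sat on the mat ")
print(task)
```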

Arguments

lc: The number of lines of text in the validation file to be used for the evaluation.

fn: The name of the validation file.

Examples

# Start of environment setup code
# The level of detail in the information messages
ve <- 0
# The name of the folder that will contain all the files. It will be
# created in the current directory. NULL implies tempdir will be used
fn <- NULL
# The required files. They are default files that are part of the
# package
rf <- c("def-model.RDS", "validate-clean.txt")
# An object of class EnvManager is created
em <- EnvManager$new(ve = ve, rp = "./")
# The required files are downloaded
ed <- em$setup_env(rf, fn)
# End of environment setup code
# The model file name
mfn <- paste0(ed, "/def-model.RDS")
# The validation file name
vfn <- paste0(ed, "/validate-clean.txt")
# ModelEvaluator class object is created
me <- ModelEvaluator$new(mf = mfn, ve = ve)
# The extrinsic evaluation is performed
stats <- me$extrinsic_evaluation(lc = 100, fn = vfn)
# The evaluation stats are printed
print(stats)
# The test environment is removed. Comment the below line, so the
# files generated by the function can be viewed
em$td_env()

Examples

## ------------------------------------------------
## Method `ModelEvaluator$compare_performance`
## ------------------------------------------------
# Start of environment setup code
# The level of detail in the information messages
ve <- 0
# The name of the folder that will contain all the files. It will be
# created in the current directory. NULL implies tempdir will be
# used.
fn <- NULL
# The required files. They are default files that are part of the
# package
rf <- c("def-model.RDS")
# An object of class EnvManager is created
em <- EnvManager$new(ve = ve, rp = "./")
# The required files are downloaded
ed <- em$setup_env(rf, fn)
# End of environment setup code
# ModelEvaluator class object is created
me <- ModelEvaluator$new(ve = ve)
# The performance evaluation is performed
me$compare_performance(opts = list(
  "save_to" = NULL,
  "dir" = ed
))
# The test environment is removed. Comment the below line, so the
# files generated by the function can be viewed
em$td_env()

## ------------------------------------------------
## Method `ModelEvaluator$evaluate_performance`
## ------------------------------------------------
# Start of environment setup code
# The level of detail in the information messages
ve <- 0
# The name of the folder that will contain all the files. It will be
# created in the current directory. NULL implies tempdir will be used
fn <- NULL
# The required files. They are default files that are part of the
# package
rf <- c("def-model.RDS", "validate-clean.txt")
# An object of class EnvManager is created
em <- EnvManager$new(ve = ve, rp = "./")
# The required files are downloaded
ed <- em$setup_env(rf, fn)
# End of environment setup code
# The model file name
mfn <- paste0(ed, "/def-model.RDS")
# The validation file name
vfn <- paste0(ed, "/validate-clean.txt")
# ModelEvaluator class object is created
me <- ModelEvaluator$new(mf = mfn, ve = ve)
# The performance evaluation is performed
stats <- me$evaluate_performance(lc = 20, fn = vfn)
# The evaluation stats are printed
print(stats)
#> $m
#> [1] 279320
#>
#> $t
#> [1] 0.111
#>
#> $p
#> [1] 2297.35
#>
#> $a
#> [1] 0
#>
# The test environment is removed. Comment the below line, so the
# files generated by the function can be viewed
em$td_env()

## ------------------------------------------------
## Method `ModelEvaluator$intrinsic_evaluation`
## ------------------------------------------------
# Start of environment setup code
# The level of detail in the information messages
ve <- 0
# The name of the folder that will contain all the files. It will be
# created in the current directory. NULL implies tempdir will be used
fn <- NULL
# The required files. They are default files that are part of the
# package
rf <- c("def-model.RDS", "validate-clean.txt")
# An object of class EnvManager is created
em <- EnvManager$new(ve = ve, rp = "./")
# The required files are downloaded
ed <- em$setup_env(rf, fn)
# End of environment setup code
# The model file name
mfn <- paste0(ed, "/def-model.RDS")
# The validation file name
vfn <- paste0(ed, "/validate-clean.txt")
# ModelEvaluator class object is created
me <- ModelEvaluator$new(mf = mfn, ve = ve)
# The intrinsic evaluation is performed
stats <- me$intrinsic_evaluation(lc = 20, fn = vfn)
# The evaluation stats are printed
print(stats)
#> $min
#> [1] 282
#>
#> $max
#> [1] 8248
#>
#> $mean
#> [1] 2297.35
#>
# The test environment is removed. Comment the below line, so the
# files generated by the function can be viewed
em$td_env()

## ------------------------------------------------
## Method `ModelEvaluator$extrinsic_evaluation`
## ------------------------------------------------
# Start of environment setup code
# The level of detail in the information messages
ve <- 0
# The name of the folder that will contain all the files. It will be
# created in the current directory. NULL implies tempdir will be used
fn <- NULL
# The required files. They are default files that are part of the
# package
rf <- c("def-model.RDS", "validate-clean.txt")
# An object of class EnvManager is created
em <- EnvManager$new(ve = ve, rp = "./")
# The required files are downloaded
ed <- em$setup_env(rf, fn)
# End of environment setup code
# The model file name
mfn <- paste0(ed, "/def-model.RDS")
# The validation file name
vfn <- paste0(ed, "/validate-clean.txt")
# ModelEvaluator class object is created
me <- ModelEvaluator$new(mf = mfn, ve = ve)
# The extrinsic evaluation is performed
stats <- me$extrinsic_evaluation(lc = 100, fn = vfn)
# The evaluation stats are printed
print(stats)
#> $valid
#> [1] 1
#>
#> $invalid
#> [1] 74
#>
#> $valid_perc
#> [1] 1.333333
#>
#> $invalid_perc
#> [1] 98.66667
#>
# The test environment is removed. Comment the below line, so the
# files generated by the function can be viewed
em$td_env()
